Achievements

Earned the Snowflake SnowPro Core Certification through self-paced study and hands-on practice, including in-depth documentation review and completion of multiple technical Udemy courses.

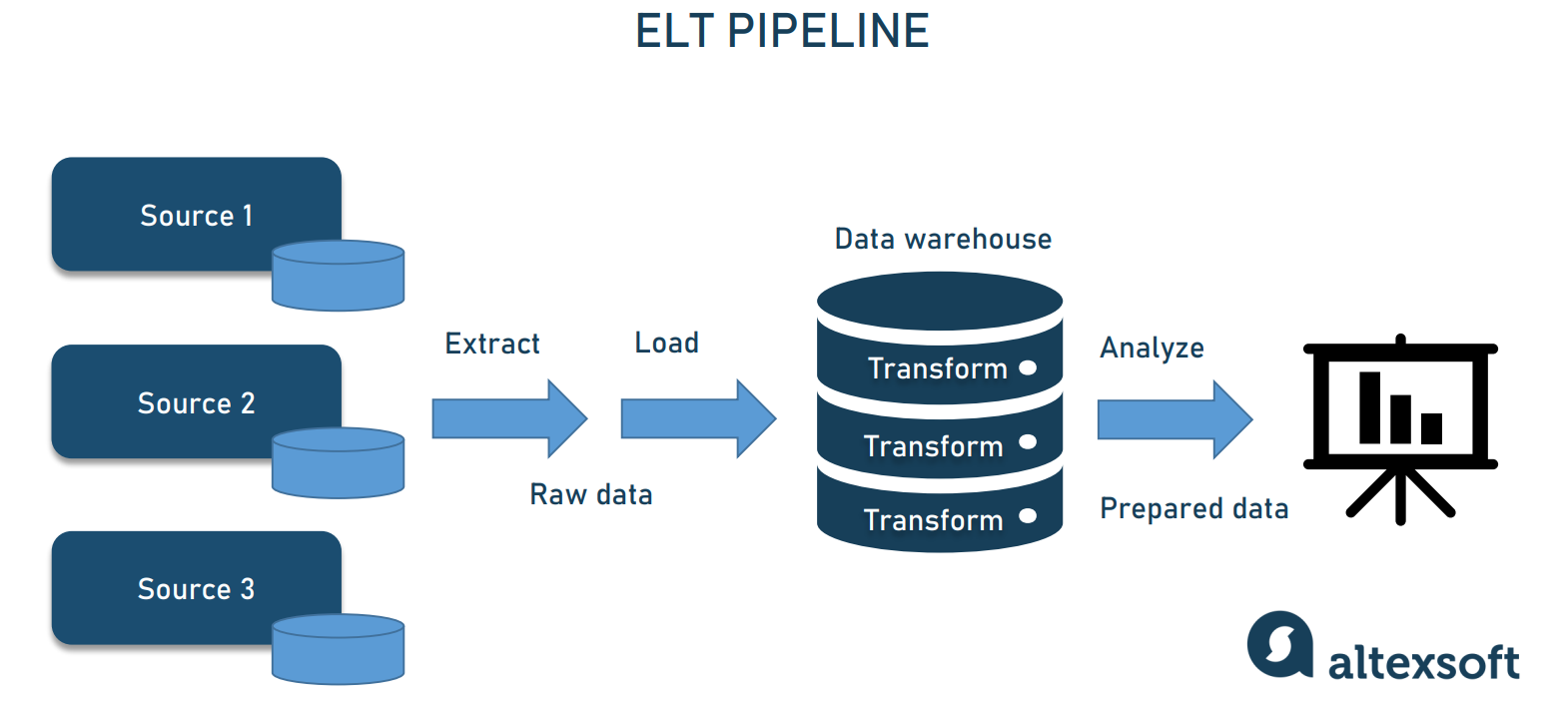

Led the successful migration of siloed client databases into a centralized GCP BigQuery warehouse, using Airflow DAGs, schema standardization, and parameterized SQL templates to build reusable, scalable pipelines while keeping downtime near zero.

Designed and authored robust documentation for legacy and cloud-native Airflow pipelines, enabling faster onboarding, knowledge sharing, and long-term maintainability of data infrastructure.

Developed onboarding resources, technical guides, and live training sessions to accelerate junior engineer development and foster best practices across the data engineering team.

Implemented Python scripts and modular Airflow pipelines that follow the DRY principle to automate recurring ETL tasks, improving operational efficiency and reducing manual intervention.

Worked concurrently across multiple cloud platforms, including AWS, Snowflake, and Google Cloud Platform (GCP), supporting diverse codebases and data workflows.

Recognized as a subject matter expert in legacy systems; reverse-engineered undocumented pipelines and modernized data workflows to ensure continuity while enhancing scalability and performance.

Completed the intensive Coding Temple bootcamp, gaining foundational knowledge in full-stack development, SQL, Python, and data engineering best practices through hands-on projects.

Graduated from Wavicle Data Solutions’ internal Data Engineering Bootcamp, delivering a pipeline solution for taxi data analysis using AWS Redshift, Talend, and Python-based ETL design patterns.